Explainable AI (XAI): Making AI More Transparent and Understandable



Understanding the Need for Explainable AI (XAI)

Let's face it: AI is getting seriously powerful. But how many of us *really* understand how these algorithms make decisions? Often a model is a black box: you feed it data and it spits out an answer. But what happens inside? That's where Explainable AI, or XAI, comes in. XAI is all about making AI more transparent and understandable. It's about opening up that black box and showing how the model arrived at its conclusion.

The Importance of Transparency in AI and Machine Learning

Why is transparency so important? For starters, it builds trust. If we understand how an AI system works, we're more likely to trust its decisions, and that's crucial when AI is used in high-stakes settings like healthcare, finance, or criminal justice. Imagine a doctor relying on an AI to diagnose a patient. Would you want that doctor to blindly accept the AI's recommendation without understanding why it made that diagnosis? Of course not.

Transparency also helps us identify and correct biases. AI systems are trained on data, and if that data is biased, the AI is likely to be biased too. By understanding how the AI makes decisions, we can spot these biases and take steps to mitigate them. This is essential for ensuring that AI is fair and equitable for everyone.

Key Techniques in Explainable AI (XAI)

So how do we actually make AI more explainable? There are a bunch of different techniques out there, each with its own strengths and weaknesses. Here are a few of the most popular:

LIME (Local Interpretable Model-Agnostic Explanations)

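LIME is a technique that explains the predictions of *any* machine learning classifier by approximating it locally with an interpretable model. It perturbs the input around one specific prediction, watches how the model's output changes, and fits a simple model (typically a sparse linear one) to those nearby points. The simple model's weights show which features mattered most for that prediction. It's like asking the AI "Why did you make *this* particular decision?" and getting an approximate, local answer.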
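To make the "approximate it locally" idea concrete, here is a minimal from-scratch sketch using only the Python standard library. It is not the `lime` package's API: the `black_box` model, its `income` and `debt` features, the Gaussian perturbation, and the exponential proximity kernel are all assumptions chosen for illustration.

```python
import math
import random


def black_box(x):
    """Hypothetical opaque model: a score in (0, 1) from two features."""
    income, debt = x
    return 1.0 / (1.0 + math.exp(-(0.8 * income - 1.5 * debt)))


def solve_linear_system(a, b):
    """Solve a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * w[c] for c in range(r + 1, n))
        w[r] = (m[r][n] - s) / m[r][r]
    return w


def explain_locally(model, instance, n_samples=2000, scale=0.5,
                    kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear surrogate around one instance.

    Returns (intercept, slopes). Each slope estimates how the model's output
    changes per unit change of that feature near the instance.
    """
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        # Perturb the instance and record how the black box responds.
        z = [xi + rng.gauss(0.0, scale) for xi in instance]
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, instance))
        weights.append(math.exp(-dist2 / kernel_width ** 2))  # closer = heavier
        rows.append([1.0] + [zi - xi for zi, xi in zip(z, instance)])
        targets.append(model(z))
    # Weighted least squares via the normal equations (X^T W X) w = X^T W y.
    k = len(instance) + 1
    xtwx = [[sum(wt * r[i] * r[j] for r, wt in zip(rows, weights))
             for j in range(k)] for i in range(k)]
    xtwy = [sum(wt * r[i] * t for r, wt, t in zip(rows, weights, targets))
            for i in range(k)]
    coef = solve_linear_system(xtwx, xtwy)
    return coef[0], coef[1:]


if __name__ == "__main__":
    intercept, slopes = explain_locally(black_box, [1.0, 0.5])
    for name, slope in zip(["income", "debt"], slopes):
        print(f"{name}: local weight {slope:+.3f}")
```

The signs and relative sizes of the slopes are the explanation: a positive weight pushes this particular prediction up, a negative one pushes it down. A real LIME implementation adds feature selection and handles categorical, text, and image inputs, none of which this sketch attempts.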

SHAP (SHapley Additive exPlanations)

SHAP is based on cooperative game theory. It assigns each feature a value that represents its contribution to a prediction, measured relative to a baseline output. It's like figuring out how much each player on a team contributed to the win. SHAP values explain individual predictions, and aggregating them across many predictions gives a picture of each feature's overall importance.
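The team analogy can be worked through exactly for a tiny model. The sketch below computes Shapley values by brute force over every coalition of features; it does not use the `shap` library, and the `risk_model` function and the all-zeros baseline are made up for illustration. Brute force needs a number of model calls that grows exponentially with the feature count, which is why practical tools rely on approximations or model-specific shortcuts.

```python
from itertools import combinations
from math import factorial


def risk_model(x):
    """Hypothetical risk score with an interaction between features 0 and 1."""
    return 2.0 * x[0] + 1.0 * x[1] + 0.5 * x[0] * x[1] - 1.0 * x[2]


def shapley_values(model, instance, baseline):
    """Exact Shapley values for one prediction, relative to a baseline input."""
    n = len(instance)

    def value(coalition):
        # Features in the coalition take the instance's value;
        # all other features stay at their baseline value.
        x = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return model(x)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when it joins coalition s.
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


if __name__ == "__main__":
    instance, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
    phi = shapley_values(risk_model, instance, baseline)
    print("contributions:", phi)
    print("sum of contributions:", sum(phi))
    print("prediction minus baseline:", risk_model(instance) - risk_model(baseline))
```

The contributions add up to the gap between the prediction and the baseline output, and the interaction term is split evenly between the two features involved. Both properties follow from the Shapley definition.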

Rule-Based Systems

These systems use a set of explicit rules to make decisions. Because the rules are human-readable, the reasoning behind any decision can be traced to the rule that fired. It's like having a flowchart that shows you exactly how the AI arrived at its conclusion.
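As a small illustration, a rule-based decision function can return the name of the rule that fired alongside the decision itself, so every outcome carries its own explanation. The rule names, thresholds, and transaction fields below are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    condition: Callable[[dict], bool]
    decision: str


# Hypothetical rules for screening card transactions; checked in order.
RULES = [
    Rule("amount_over_limit", lambda t: t["amount"] > 5000, "review"),
    Rule("foreign_and_new_account",
         lambda t: t["foreign"] and t["account_age_days"] < 30, "review"),
]


def decide(transaction, rules=RULES, default="approve"):
    """Return (decision, reason), where reason names the first matching rule."""
    for rule in rules:
        if rule.condition(transaction):
            return rule.decision, rule.name
    return default, "no_rule_matched"


if __name__ == "__main__":
    tx = {"amount": 9000, "foreign": False, "account_age_days": 400}
    print(decide(tx))
```

Because the first matching rule wins, rule order is part of the logic and needs to be documented with the rules themselves.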

Decision Trees

Decision trees are another type of interpretable model. Each internal node tests a feature, each branch represents an outcome of that test (for example, a value at or below a threshold versus above it), and each leaf holds a prediction. By following the branches from the root to a leaf, you can see exactly how the model arrived at its conclusion. This works best for reasonably small trees, since very deep trees become hard to read.
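Following the branches from root to leaf can be sketched in a few lines. The tree below is hand-built rather than learned from data, and its features and thresholds are invented for illustration.

```python
# A hand-built tree: internal nodes test "feature <= threshold",
# and leaves hold the final decision.
TREE = {
    "feature": "income", "threshold": 40000,
    "left": {"leaf": "deny"},            # income <= 40000
    "right": {                           # income > 40000
        "feature": "debt_ratio", "threshold": 0.4,
        "left": {"leaf": "approve"},     # debt_ratio <= 0.4
        "right": {"leaf": "deny"},       # debt_ratio > 0.4
    },
}


def predict_with_path(tree, sample):
    """Walk from the root to a leaf, recording each test along the way."""
    path = []
    node = tree
    while "leaf" not in node:
        feature, threshold = node["feature"], node["threshold"]
        value = sample[feature]
        if value <= threshold:
            path.append(f"{feature} = {value} <= {threshold}")
            node = node["left"]
        else:
            path.append(f"{feature} = {value} > {threshold}")
            node = node["right"]
    return node["leaf"], path


if __name__ == "__main__":
    decision, path = predict_with_path(TREE, {"income": 55000, "debt_ratio": 0.25})
    print(decision)
    for step in path:
        print("  because", step)
```

The recorded path is the explanation: it lists every test the sample passed through on its way to the leaf.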

Applications of Explainable AI (XAI) Across Industries

XAI isn't just a theoretical concept. It's being used in a wide range of industries to solve real-world problems. Here are a few examples:

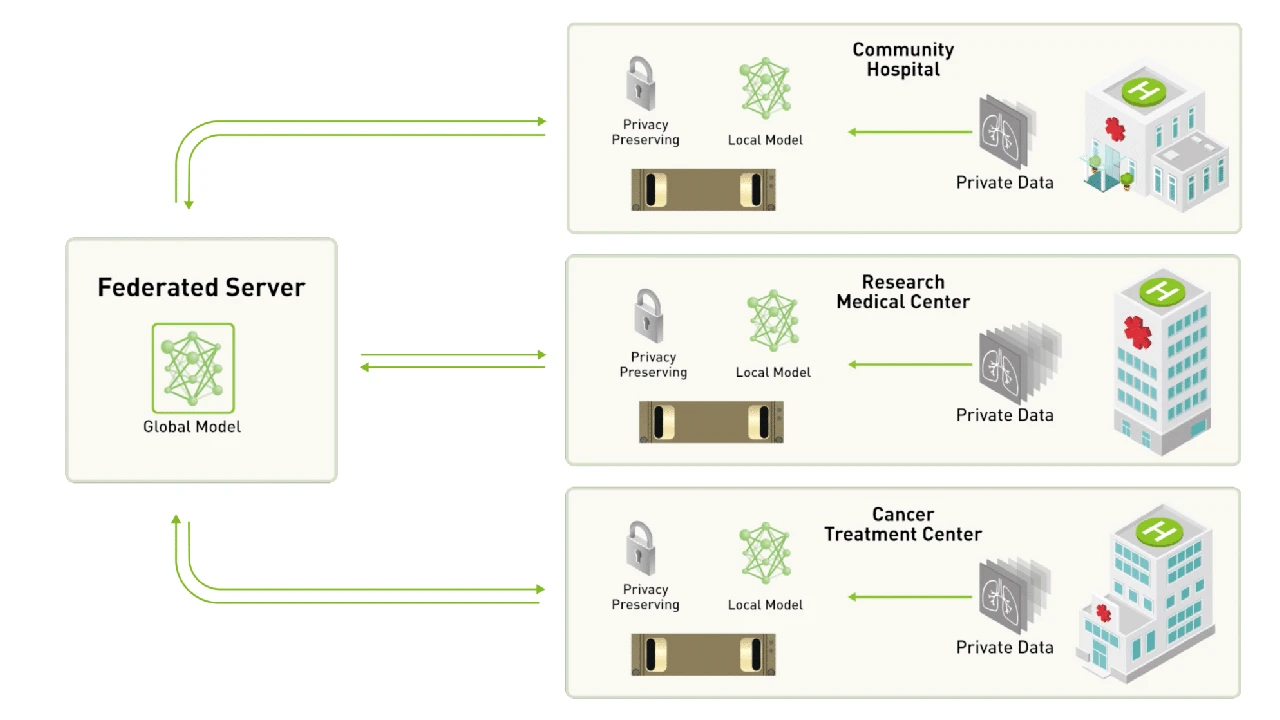

Healthcare: AI for Diagnosis and Treatment

In healthcare, XAI can help doctors understand why an AI system made a particular diagnosis. This can help them make more informed decisions about treatment and improve patient outcomes. For example, XAI could be used to explain why an AI system predicted that a patient was at high risk of developing a certain disease.

Finance: AI for Fraud Detection and Risk Management

In finance, XAI can help banks and other financial institutions detect fraud and manage risk. By understanding how an AI system identifies fraudulent transactions, they can improve their fraud detection systems and reduce losses. XAI can also be used to explain why an AI system denied a loan application.

Criminal Justice: AI for Fair Sentencing and Predictive Policing

In criminal justice, XAI can help ensure that AI systems are used fairly and equitably. By understanding how an AI system arrives at a sentencing recommendation, judges are better placed to spot biased reasoning and to check that sentences are proportionate to the crime. XAI can also be used to evaluate the effectiveness of predictive policing algorithms.

Marketing: AI for Personalized Recommendations and Targeted Advertising

In marketing, XAI can help companies personalize recommendations and target advertising more effectively. By understanding why an AI system recommended a particular product or ad, they can improve the relevance and effectiveness of their marketing campaigns.

Specific XAI Product Recommendations and Comparisons

LIME: Product Recommendation and Comparison

While LIME isn't a product per se, it's a technique implemented in Python libraries, most notably the `lime` package itself. It's free and open-source. Think of it as a toolbox rather than a pre-packaged solution. Its strength lies in its model-agnostic nature, meaning it can be applied to nearly any machine learning model. However, it requires programming knowledge to implement and to interpret the results.

Use Case: Explaining why a credit card transaction was flagged as fraudulent.

SHAP: Product Recommendation and Comparison

Similar to LIME, SHAP is a technique implemented in a Python library called `shap`. Again, it's free and open-source. Compared to LIME, SHAP provides more theoretically grounded explanations because it builds on Shapley values from game theory. This often results in more consistent and reliable explanations. However, it can be computationally expensive, especially for complex models or large numbers of features.

Use Case: Understanding which factors contributed most to a patient's risk score for a particular disease.

IBM Watson OpenScale: Product Recommendation and Comparison

IBM Watson OpenScale is a commercial product designed for monitoring and explaining AI models in production. It offers features like fairness monitoring, explainability, and drift detection. The pricing varies based on usage and features. It's a good option for enterprise-level deployments where you need a comprehensive platform for managing and explaining your AI models. It provides a user-friendly interface and pre-built explainability tools, but comes at a cost.

Use Case: Monitoring the fairness and explainability of a loan application model in a bank.
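To give a feel for what fairness monitoring of a loan model might measure, the sketch below computes approval rates per group and their ratio, often called the disparate impact ratio. This is a generic illustration and not IBM Watson OpenScale's API; the record fields, the group labels, and the function names are all assumptions.

```python
from collections import defaultdict


def approval_rates(records, group_key="group", outcome_key="approved"):
    """Share of approved applications per group."""
    totals, approved = defaultdict(int), defaultdict(int)
    for record in records:
        totals[record[group_key]] += 1
        approved[record[group_key]] += int(record[outcome_key])
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(records, monitored, reference):
    """Approval rate of the monitored group divided by the reference group's."""
    rates = approval_rates(records)
    return rates[monitored] / rates[reference]


if __name__ == "__main__":
    # Made-up model decisions: group A approved 8 of 10, group B approved 4 of 10.
    decisions = (
        [{"group": "A", "approved": True}] * 8
        + [{"group": "A", "approved": False}] * 2
        + [{"group": "B", "approved": True}] * 4
        + [{"group": "B", "approved": False}] * 6
    )
    print(approval_rates(decisions))
    print(disparate_impact_ratio(decisions, monitored="B", reference="A"))
```

A ratio well below 1 means the monitored group is approved less often than the reference group. That is a prompt to investigate with the explanation techniques above, not proof of bias on its own.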

Alibi Explain: Product Recommendation and Comparison

Alibi Explain is an open-source Python library focused specifically on providing explanations for machine learning models. It offers a range of explainability methods, including anchors, counterfactual explanations, integrated gradients, and SHAP-based explainers. It's a good choice for researchers and developers who want a flexible and customizable explainability solution. It's free to download, though it requires more technical expertise to implement, and the license terms are worth checking for the release you install.

Use Case: Debugging a computer vision model that's misclassifying images.

Pricing Details

The `lime` and `shap` libraries are open-source and free to use. IBM Watson OpenScale has variable pricing depending on the plan and usage, so current figures should be obtained directly from IBM. Alibi Explain is also free to download; check the license of the release you install for any terms on commercial use.

The Future of Trustworthy AI and Explainability

XAI is still a relatively new field, but it's evolving rapidly. As AI becomes more pervasive in our lives, the need for transparency and explainability will only grow. We can expect to see even more sophisticated XAI techniques emerge in the coming years, as well as greater adoption of XAI in various industries.

Ultimately, XAI is about building trust in AI. By making AI more transparent and understandable, we make it easier to check that it's used responsibly and ethically. This is essential for realizing the full potential of AI and creating a future where AI benefits everyone.
